Oligo Accelerates Vulnerability Intelligence with NVIDIA AI: Enabling Real-Time Cybersecurity at Scale

TL;DR

- 55X cost reduction and 2.5X faster response times in real-time detection pipelines by deploying NVIDIA Nemotron Nano 9B v2 via Amazon Bedrock - with no loss in accuracy compared to Claude 4.5 Sonnet.

- We reached 4X faster end‑to‑end CVE enrichment throughput after moving inference to NVIDIA Nemotron instruction-tuned models, optimized for our NER (Named Entity Recognition) task.

- ~20% more CVEs covered by integrating preprocessing from NVIDIA AI Blueprint for Vulnerability Analysis, which improved our pipeline’s context.

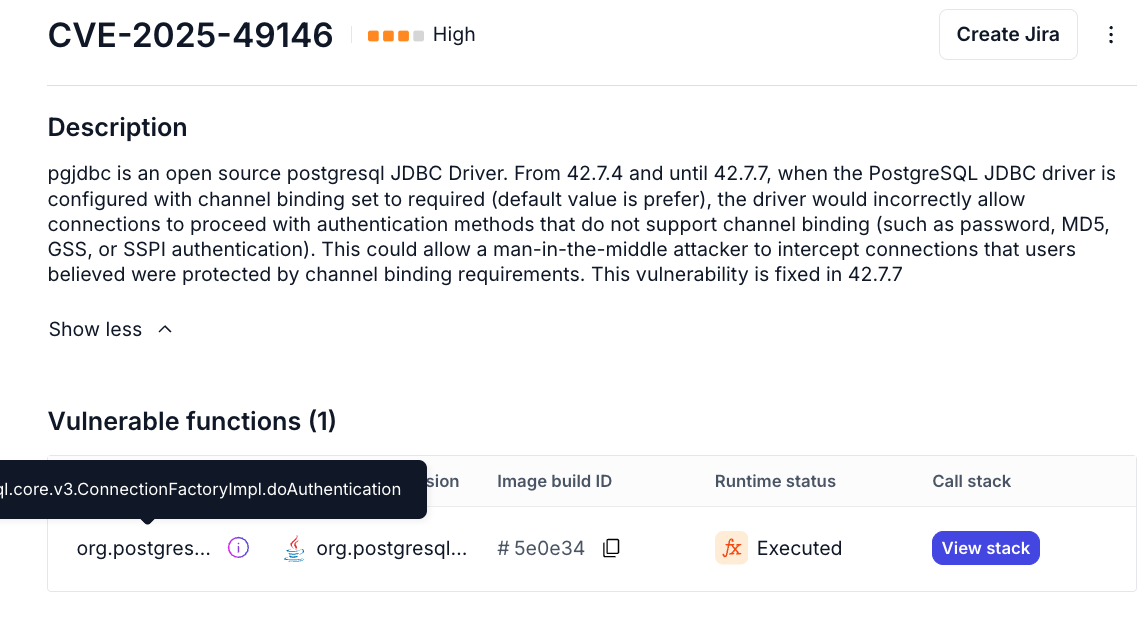

- 1100% more vulnerable functions identified vs. commercial CVE Advisories.

- 4X cost reduction for the enrichment pipeline, while enabling real-time CVE enrichment seconds after publication.

The breakthrough wasn’t a bigger general‑purpose LLM. It was an expert‑tuned pipeline - built on NVIDIA acceleration - feeding the model the right context at the right time in a way that can scale cost-efficiently.

The Challenge: Vulnerability Intelligence at Scale

Security teams today face a critical challenge: identifying which vulnerabilities actually impact their environment and require immediate attention. Traditional vulnerability management approaches rely on generic severity scores that lack the context needed for effective prioritization.

The missing piece? Identifying the specific vulnerable functions within affected code.

This is especially difficult because:

- Cybersecurity data is massive - runtime environments generate petabytes of telemetry and call stacks.

- Context grows quickly - vulnerability analysis often requires combining advisory data, runtime traces, dependency graphs, and patch diffs into a single model input.

- Complexity is high - LLM models must handle nuanced, domain-specific patterns (such as hexadecimal or obfuscated strings) while still reasoning about “basic cybersecurity facts.”

- General-purpose LLMs fall short - they generalize well and are usually the starting point in “making things work” as the first phase. They are costly and not tuned for security tasks. In many cases, smaller instruction-tuned models outperform larger ones when optimized for cybersecurity workloads.

Without the right models and without the right context, enrichment pipelines become expensive, slow, and incomplete.

The Impact: Security Blind Spots and Resource Waste

Without function-level vulnerability intelligence, organizations face two major problems:

- False Positives - Security teams wasted resources chasing vulnerabilities that don’t actually impact deployed applications (usually results of code scanning without runtime context). The dependency is present (installed) in runtime, but never actually used, so fixing the vulnerability will not improve the application security posture.

- False Negatives - Dangerous blind spots when critical vulnerabilities remain undetected because teams cannot confirm if vulnerable code paths are in use, assuming some functions are not executed based on code scanning and reachability. These assumptions are dangerous and provide a false sense of safety.

Oligo’s research showed that traditional vulnerability databases cover less than 4% of vulnerabilities at the function level. This massive intelligence gap leaves teams flying blind, unable to prioritize effectively.

The Solution: Vulnerability Intelligence Powered by NVIDIA AI

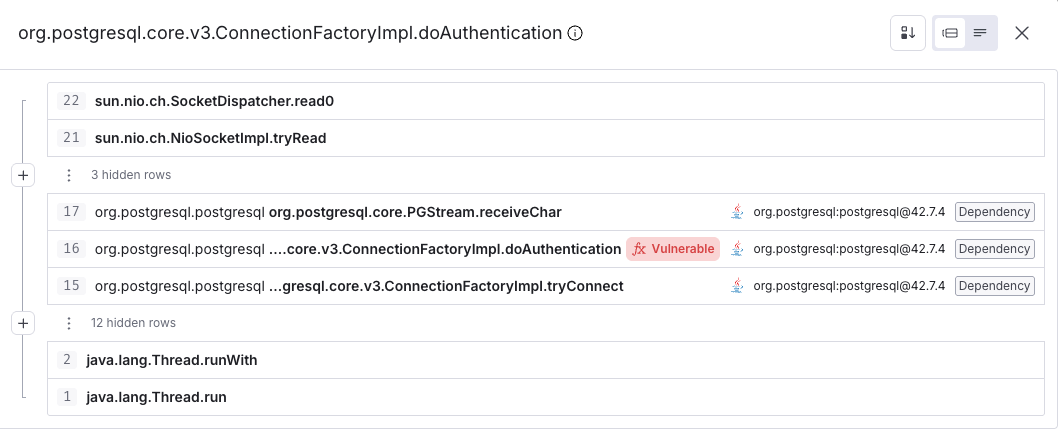

To close this gap, Oligo built a Named Entity Recognition (NER) pipeline that extracts vulnerable functions directly from advisories and patches, and validates them based on runtime data.

The first iteration, powered by Amazon Bedrock and Claude 3.5 Sonnet, showed promise, but suffered from high costs, limited throughput, and gaps in coverage - such as when a given CVE data is distributed between several advisories.

Deploying Nemotron models through Amazon Bedrock allowed us to move rapidly from evaluation to production while maintaining consistent security and operational controls.

We transformed the pipeline to use the following components from NVIDIA:

- NVIDIA Nemotron NAS-based model - For reasoning-heavy enrichment tasks, we replaced Claude 3.5 Sonnet with NVIDIA llama-3.1-nemotron-70b-instruct, deployed on cloud GPUs. This model class is used for tasks that require deeper context and higher recall.

- Right-Sized Models for Real-Time Detection - Not every security task requires a large model. For latency-sensitive and high-volume detection logic, we deployed NVIDIA Nemotron Nano 9B v2 in production via Amazon Bedrock. Despite being significantly smaller, Nemotron 9B v2 achieved the same accuracy on our detection tasks, while delivering 2.5X faster response times and 55X lower inference cost compared to Claude Sonnet 4.5. This made real-time, always-on detection economically viable at scale for all customers.

- NVIDIA AI Blueprint for Vulnerability Analysis - We integrated preprocessing code from the NVIDIA AI Blueprint for Vulnerability Analysis, enriching context with GitHub Security Advisories (GHSA) out of the box for CVEs. This greatly increased the coverage for open source projects that manage security advisories via GHSA.

- End-to-End Optimization - By combining inference acceleration, model right-sizing, and preprocessing, we built a pipeline that is both faster and more accurate - while dramatically lowering cost and increasing our unique knowledge about CVEs by 11X.

- Evaluation of results and Supporting Evidence - we cross-references the inferred (“suggested”) functions names, which are the output of our pipeline. We check whether such function: (a) exists in that version and (b) seen running in the customer environment. When we see the function executed in runtime inside a production application, we know the vulnerability risk is real and deserves attention.

Together, these components form a tiered, production-grade AI pipeline - using larger Nemotron models where deep reasoning is required, and smaller, optimized models where real-time speed and cost efficiency matter most.

The Results: Transformative Improvements

The NVIDIA-powered pipeline delivered measurable, production-ready results:

- 4X Faster Processing - Using the optimized model inference enabled real-time CVE enrichment with higher throughput that enabled us to scale the solution.

- 20% More CVEs Covered - Improved preprocessing expanded coverage, especially for GitHub-managed vulnerabilities.

- Enhanced Reasoning - Outputs included richer details like specific commits and line references, with higher confidence, so we can explain the model’s choice and thinking phases. This is evidence for our customers.

- Cost Reduction & Efficiency - We reduced compute requirements by 4X while maintaining accuracy. Our NER pipeline now covers an entire year of CVEs (50K) in less than 1 hour for a fraction of the cost. The new pipeline allows easy experimentation, regression tests, and asking general questions on a bunch of CVEs on demand.

- Real-Time Capability - CVE enrichment is now available within seconds of publication, enabling rapid security response.

- Oligo’s pipeline, powered by NVIDIA, now identifies 1100% more vulnerable functions than commercial databases, with NVIDIA preprocessing code resulting in additional 20% coverage for CVEs.

Beyond Traditional Security AI

Historically, AI for cybersecurity has been “boring”: anomaly detection, log scanning, and basic classification. Large language models didn’t change that - general-purpose LLMs added cost, but not real-world value.

Oligo took a different path:

- We built a NER-based pipeline optimized for vulnerability enrichment.

- We proved that specialized instruction-tuned models can outperform expensive general-purpose LLMs in task accuracy and cost efficiency, and still be real-time.

- We achieved scale - covering petabytes of runtime security data and enriching hundreds of thousands of CVEs - without sacrificing on our main KPIs (cost, latency, and accuracy).

This approach unlocked actionable, function-level vulnerability intelligence that directly informs remediation decisions - something anomaly detection alone could never deliver.

Looking Forward

Our integration of NVIDIA Nemotron has proven that AI can deliver real-time, actionable cybersecurity intelligence at scale - not just faster, but smarter and more cost-effective.

For security teams, this means:

- Knowing what to fix first.

- Reducing risk while optimizing resources.

- Closing the intelligence gap left by traditional vulnerability databases.

And this is just the start. Beyond CVE enrichment, Oligo is applying NVIDIA technology to malicious command detection, incident summarization, and remediation recommendations - expanding the frontier of cybersecurity AI. Oligo is a proud member of the NVIDIA Inception, a global program for startups providing technical support, preferred pricing on NVIDIA solutions, and connections with potential customers and investors.

Oligo is showing that the future of cybersecurity isn’t anomaly detection or logs classification - its AI tailor-made pipelines that deliver real-time, precise, and actionable intelligence.

.svg)