Critical vulnerabilities in AI inference servers impact Meta, NVIDIA, Microsoft, vLLM, SGLang, and Modular projects.

In the race to speed up AI adoption, AI infrastructure also needs to become safer.

Over the past year, Oligo Security’s research team disclosed a series of critical Remote Code Execution (RCE) vulnerabilities lurking inside some of the most widely used AI inference servers, including frameworks from Meta, NVIDIA, and Microsoft, as well as PyTorch projects like vLLM and SGLang.

These vulnerabilities all traced back to the same root cause: the overlooked unsafe use of ZeroMQ (ZMQ) and Python’s pickle deserialization. But what surprised us the most wasn’t the bug itself. It was how it spread.

As we dug deeper, we found that code files were copied between projects (sometimes line-for-line) carrying dangerous patterns from one repository to the next.

We call this pattern ShadowMQ: a hidden communication-layer flaw propagated through code reuse in the modern AI stack.

The Discovery

It started, as all of our investigations do, with curiosity.

In 2024, while analyzing Meta’s Llama Stack, we noticed an odd pattern: the use of ZMQ’s recv_pyobj() method – a convenience function that deserializes incoming data using Python’s pickle module.

If you’ve worked with Python, you know pickle isn’t designed for security. It can execute arbitrary code during deserialization, which is fine in a tightly controlled environment, but far from fine if exposed over the network.

Meta’s Llama Stack was doing exactly that. Unauthenticated ZMQ sockets, deserializing untrusted data, using pickle. That’s a recipe for remote code execution.

    def recv_pyobj(self, flags: int = 0) -> Any:
        msg = self.recv(flags)
        return self._deserialize(msg, pickle.loads)
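To make the risk concrete, here is a minimal and deliberately harmless sketch of why calling pickle.loads on untrusted bytes is dangerous. The class name NotJustData is hypothetical, and len() stands in for whatever callable an attacker would actually choose.

```python
import pickle


class NotJustData:
    """An object whose pickled form names a callable to run at load time."""

    def __reduce__(self):
        # pickle stores (callable, args) and pickle.loads calls callable(*args).
        # len() is harmless, but any importable callable could be named here.
        return (len, ("four",))


payload = pickle.dumps(NotJustData())

# The receiver only has to load the bytes for the call to happen.
result = pickle.loads(payload)
print(result)  # 4: the return value of len("four"), not a NotJustData instance
```

The bytes sent over the wire decide which function runs on the receiver, which is why recv_pyobj() on an unauthenticated socket amounts to remote code execution.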

We reported the issue to Meta, which quickly issued a patch (CVE-2024-50050) that replaced pickle with a safe JSON-based serialization mechanism. But we had a feeling this wasn’t an isolated problem.
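As an illustration of the general idea behind a JSON-based fix (not Meta’s actual patch), the sketch below parses untrusted bytes as JSON and validates every field before use. The InferenceRequest shape and the decode_request helper are assumptions made up for this example.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceRequest:
    # Hypothetical message shape, used only for illustration.
    prompt: str
    max_tokens: int


def decode_request(raw: bytes) -> InferenceRequest:
    """Parse untrusted bytes as JSON and validate them field by field."""
    try:
        data = json.loads(raw)
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise ValueError("message is not valid JSON") from exc

    if not isinstance(data, dict) or set(data) != {"prompt", "max_tokens"}:
        raise ValueError("unexpected message shape")
    if not isinstance(data["prompt"], str):
        raise ValueError("prompt must be a string")
    max_tokens = data["max_tokens"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(max_tokens, int) or isinstance(max_tokens, bool):
        raise ValueError("max_tokens must be an integer")

    return InferenceRequest(prompt=data["prompt"], max_tokens=max_tokens)
```

Unlike pickle, JSON can only produce plain data (dicts, lists, strings, numbers, booleans, null), so a malformed or malicious message ends in a ValueError rather than a function call. pyzmq also offers send_json() and recv_json() on sockets; validation of the decoded result is still needed.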

The Pattern Emerges

As we scanned other inference frameworks, we noticed the same problem across the major ones.

NVIDIA’s TensorRT-LLM, the PyTorch projects vLLM and SGLang, and even the Modular Max Server all contained nearly identical unsafe patterns: pickle deserialization over unauthenticated ZMQ TCP sockets.

Different maintainers, on projects run by different companies, all made the same mistake.

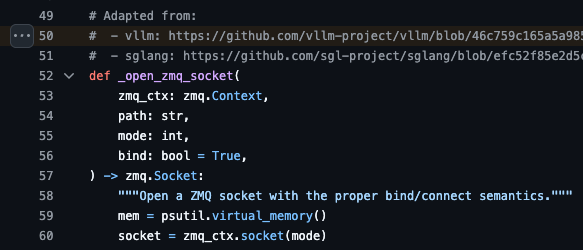

When we traced the code lineage, the reason became obvious: code reuse and, in some cases, direct copy-paste of code. Entire files were being adapted from one project to another, with minimal changes. Sometimes the header comment even said so.

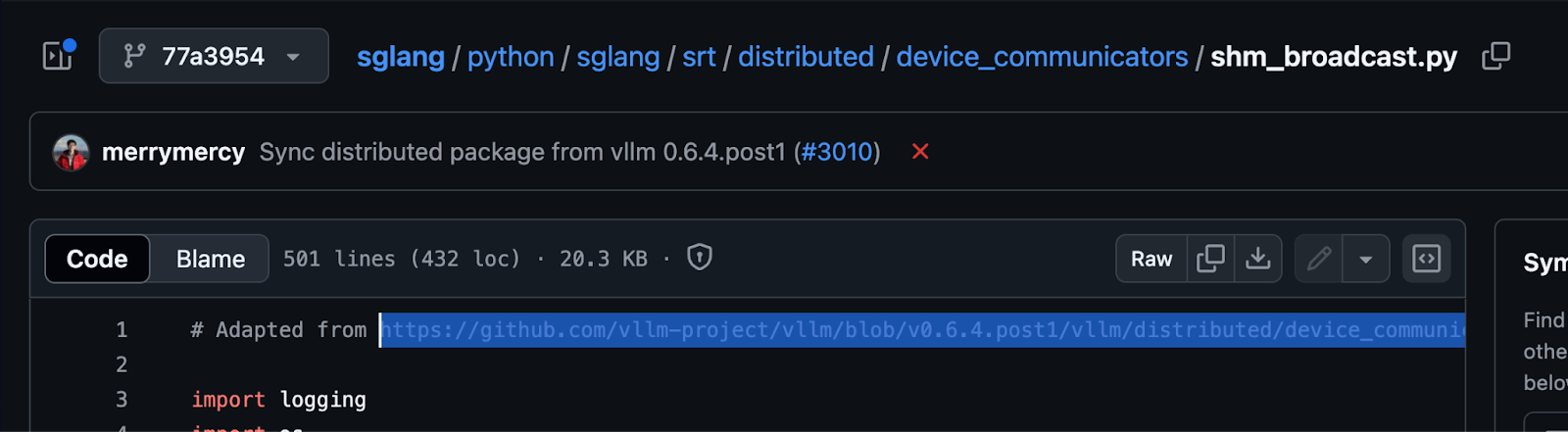

In SGLang, for example, the vulnerable file literally begins with: “Adapted from vLLM”.

That “adaptation” included not just architecture and performance optimizations, but also the exact same insecure deserialization logic that enabled RCE in vLLM, which was disclosed as CVE-2025-30165 by Oligo in May 2025.

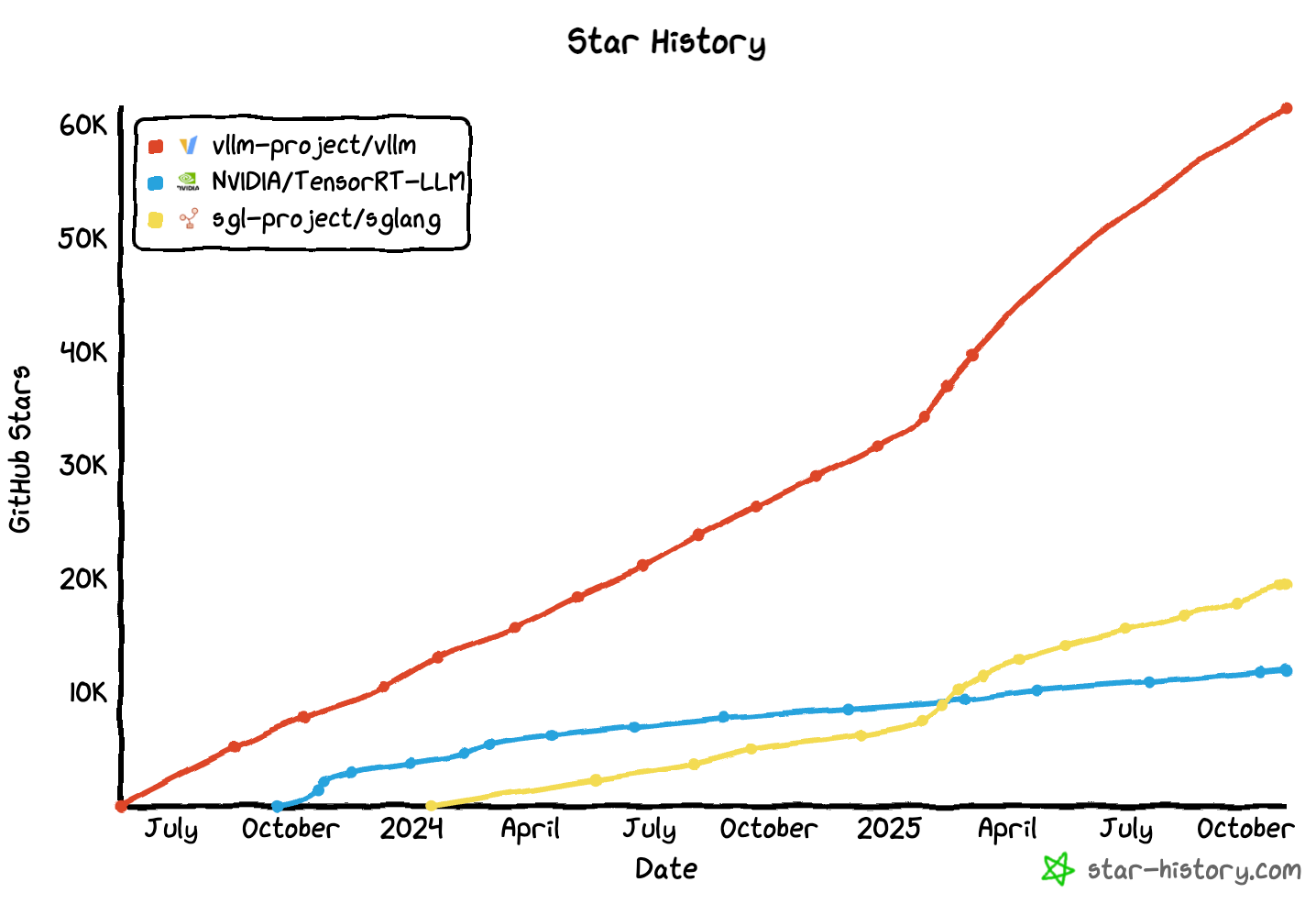

To understand the potential impact of the unpatched flaw, consider the growing list of companies that trust and have adopted SGLang according to the framework’s GitHub: xAI, AMD, NVIDIA, Intel, LinkedIn, Cursor, Oracle Cloud, Google Cloud, Microsoft Azure, AWS, Atlas Cloud, Voltage Park, Nebius, DataCrunch, Novita, InnoMatrix, MIT, UCLA, the University of Washington, Stanford, UC Berkeley, Tsinghua University, Jam & Tea Studios, Baseten, and other major technology organizations across North America and Asia.

The same pattern appeared again in Modular’s Max Server, which borrowed logic from both vLLM and SGLang.

This is how ShadowMQ spread: maintainers borrowed functionality directly from other frameworks, and the security flaw was copied and inherited along with it, replicating across repositories.

A Widespread, Shared Risk

What makes ShadowMQ especially dangerous is that these AI inference servers form the backbone of enterprise AI infrastructure.

They run critical workloads, often across clusters of GPU servers, and process highly sensitive data, from model prompts to customer information.

Exploiting a single vulnerable node could allow attackers to:

- Execute arbitrary code on the cluster

- Escalate privileges to other internal systems

- Exfiltrate model data or secrets

- Install GPU-based cryptominers for profit, like the ShadowRay campaign

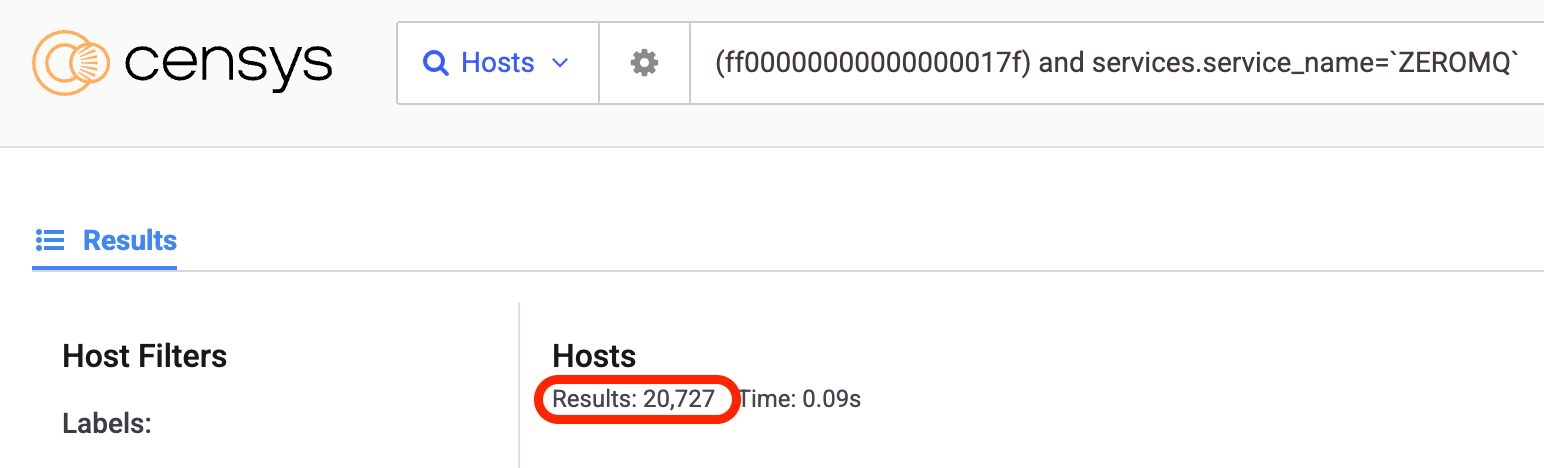

We identified thousands of exposed ZMQ sockets communicating unencrypted over the public internet, some clearly belonging to production inference servers. In these setups, one wrong deserialization call can expose an entire AI operation.

To find them, we dug into the ZMQ TCP handshake. A ZMQ socket can be recognized by a fixed byte sequence that always appears in the banner of its TCP handshake.

Searching for this banner, which is unique to the ZMQ handshake, confirmed what we suspected: thousands of ZMQ TCP sockets are exposed to the world.
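As a rough illustration of such a fingerprint, and not necessarily the exact bytes matched in the scan described here, the public ZMTP 3.x specification defines a greeting that opens with a 10-octet signature: 0xFF, eight padding octets, then 0x7F, followed by the protocol version. The helper names below are hypothetical, and the functions only inspect bytes that have already been captured.

```python
from typing import Optional

ZMTP_SIGNATURE_LEN = 10  # 0xFF + 8 padding octets + 0x7F


def looks_like_zmtp_greeting(data: bytes) -> bool:
    """Return True if data starts with a ZMTP 3.x style signature."""
    if len(data) < ZMTP_SIGNATURE_LEN:
        return False
    # The eight octets in between are padding and are not checked.
    return data[0] == 0xFF and data[9] == 0x7F


def zmtp_major_version(data: bytes) -> Optional[int]:
    """Return the major version octet that follows the signature, if present."""
    if not looks_like_zmtp_greeting(data) or len(data) <= ZMTP_SIGNATURE_LEN:
        return None
    return data[ZMTP_SIGNATURE_LEN]
```

Defenders can run a check like this against banners collected from their own address space to find ZMQ listeners that should not be reachable from outside.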

The Disclosure and Vendor Response

Here’s how the timeline unfolded:

- October 2024: Meta Llama Stack (CVE-2024-50050) – Unsafe pickle usage patched with JSON-based serialization.

- May 2025: vLLM (CVE-2025-30165) – Critical RCE fixed by replacing the vulnerable V0 engine with a safe V1 default.

- May 2025: NVIDIA TensorRT-LLM (CVE-2025-23254) – Implemented HMAC validation after Oligo’s report. Rated Critical (9.3) by NVD.

- June 2025: Modular Max Server (CVE-2025-60455) – Patched quickly using msgpack instead of pickle.

Some projects, however, did not patch.

Microsoft’s Sarathi-Serve, a research framework, remains vulnerable. SGLang, although its maintainers acknowledged our analysis, has implemented only incomplete fixes.

These are what we call Shadow Vulnerabilities: known issues that persist without CVEs, quietly waiting to be rediscovered by attackers.

RCE Demos

To show the real-world impact of ShadowMQ, we captured live demonstrations of RCE on both NVIDIA TensorRT-LLM and Modular Max. The videos below illustrate exactly how the flaw can be exploited in practice.

The Bigger Lesson: Copying Is Easy, Auditing Is Hard

Developers don’t copy vulnerable code because they’re careless. They do it because the ecosystem encourages it. In the open-source AI community, performance is king.

Projects are moving at incredible speed, and it’s common to borrow architectural components from peers. But when code reuse includes unsafe patterns, the consequences ripple outward fast.

Documentation rarely warns about the security risks of certain methods (recv_pyobj() being a perfect example).

Even code-generation tools like ChatGPT tend to repeat common examples, vulnerable or not, because they reflect real-world usage.

That’s how the same RCE vector can quietly end up in frameworks from Meta to NVIDIA.
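Auditing for this pattern can be partly automated. The sketch below is a small, assumed starting point rather than a complete scanner: it walks a Python file’s syntax tree and reports references to recv_pyobj and to pickle.load or pickle.loads. The function name find_risky_references and the sample source are hypothetical.

```python
import ast
from typing import List, Tuple

RISKY_METHODS = {"recv_pyobj"}          # pyzmq helper that unpickles the message
RISKY_PICKLE_FUNCS = {"load", "loads"}  # pickle.load / pickle.loads


def find_risky_references(source: str) -> List[Tuple[int, str]]:
    """Return sorted (line, name) pairs for risky attribute references."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Attribute):
            continue
        if node.attr in RISKY_METHODS:
            findings.append((node.lineno, node.attr))
        elif (node.attr in RISKY_PICKLE_FUNCS
              and isinstance(node.value, ast.Name)
              and node.value.id == "pickle"):
            # Catches calls and bare references such as f(msg, pickle.loads).
            findings.append((node.lineno, "pickle." + node.attr))
    return sorted(findings)


SAMPLE_SOURCE = (
    "import pickle\n"
    "def handler(sock):\n"
    "    obj = sock.recv_pyobj()\n"
    "    return deserialize(obj, pickle.loads)\n"
)
print(find_risky_references(SAMPLE_SOURCE))  # [(3, 'recv_pyobj'), (4, 'pickle.loads')]
```

A check like this misses aliased imports (for example `from pickle import loads`) and says nothing about whether the data is trusted, so it is a prompt for manual review, not a verdict.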

We would like to thank the security teams at Meta, NVIDIA, vLLM, and Modular for fixing the vulnerabilities responsibly.

How to Stay Safe

- Patch immediately:

  - Meta Llama Stack ≥ v0.0.41

  - NVIDIA TensorRT-LLM ≥ v0.18.2

  - vLLM ≥ v0.8.0

  - Modular Max Server ≥ v25.6

- Don’t use pickle or recv_pyobj() with untrusted data.

- Add authentication (HMAC or TLS) to any ZMQ-based communication.

- Scan for exposed ZMQ endpoints and restrict network access to them by binding to specific network interfaces. Avoid “tcp://*”, which exposes the socket on all network interfaces.

- Educate dev teams: serialization choices are security choices.
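Two of the recommendations above can be sketched with the standard library alone. This is a minimal illustration under stated assumptions, not any vendor’s actual fix: sign and verify prepend an HMAC-SHA256 tag to a JSON body using a pre-shared key, and is_wildcard_bind flags endpoints that listen on every interface. All three helper names are hypothetical.

```python
import hashlib
import hmac
import json

TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def sign(key: bytes, message: dict) -> bytes:
    """Serialize message as JSON and prepend an HMAC-SHA256 tag."""
    body = json.dumps(message).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).digest() + body


def verify(key: bytes, frame: bytes) -> dict:
    """Check the tag first; parse the body only if the tag is valid."""
    if len(frame) < TAG_LEN:
        raise ValueError("frame too short")
    tag, body = frame[:TAG_LEN], frame[TAG_LEN:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):  # constant-time comparison
        raise ValueError("bad signature")
    return json.loads(body)


def is_wildcard_bind(endpoint: str) -> bool:
    """Return True for TCP endpoints that bind to all interfaces."""
    if not endpoint.startswith("tcp://"):
        return False
    host = endpoint[len("tcp://"):].rsplit(":", 1)[0]
    return host in {"*", "0.0.0.0", "[::]"}
```

An HMAC tag proves that the sender holds the key, but it does not encrypt the message or stop a captured frame from being replayed, so it complements network restrictions and transport encryption rather than replacing them.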

How Oligo Protects Against These Vulnerabilities

The key advantage of Oligo's runtime security platform comes from its ability to see deeper into runtime behavior in real-time than any other technology. Below are examples of how this visibility turns into actionable intelligence to protect against the collective vulnerabilities that make up ShadowMQ.

1. Uncover the vulnerable behavior at runtime

Our technology detects when pyzmq is running and when functions like recv_pyobj() are invoked. It also observes the subsequent call to pickle.loads in the same execution stack.

2. Identify malicious pickle payloads

Oligo can determine whether the pickled object being loaded results in code execution, process creation, or any other suspicious behavior, as opposed to a legitimate use of pickle.

3. Detect exploitation regardless of how it’s triggered

Even if the attacker uses ZMQ without calling recv_pyobj(), or uses pickle in another way, Oligo can still catch combinations of ZMQ + pickle that lead to ShadowMQ-style exploitation.
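The general idea of watching deserialization at runtime can be illustrated with CPython’s own audit hooks. This sketch is not how Oligo’s platform works internally; it only assumes Python 3.8 or later, where the unpickler raises a "pickle.find_class" audit event each time it resolves a global. The names resolved_globals and CallsLenOnLoad are hypothetical.

```python
import pickle
import sys

# (module, name) pairs that unpicklers in this process have resolved.
resolved_globals = []


def _record_pickle_lookups(event, args):
    # Raised by pickle whenever loading data requires importing a global,
    # such as a class or a function named inside the payload.
    if event == "pickle.find_class":
        module, name = args
        resolved_globals.append((module, name))


sys.addaudithook(_record_pickle_lookups)  # audit hooks cannot be removed later


class CallsLenOnLoad:
    def __reduce__(self):
        return (len, ("abc",))  # harmless stand-in for an attacker's callable


pickle.loads(pickle.dumps({"tokens": [1, 2, 3]}))  # plain data, no lookups
benign_lookups = list(resolved_globals)

pickle.loads(pickle.dumps(CallsLenOnLoad()))       # resolves builtins.len
print(benign_lookups, resolved_globals)
```

Plain data never triggers a lookup, while a payload that names a callable does, which gives a simple signal for telling the two apart. A fuller version might also inspect the call stack to see whether the load originated from recv_pyobj().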

Final Thoughts

ShadowMQ isn’t just a vulnerability. It’s a case study in how innovation and insecurity can spread together.

A single function in a single library ended up creating a chain of critical flaws across multiple frameworks, affecting some of the world’s most popular AI systems. All because one project copied another’s code without fully understanding the implications.

Security, especially in AI infrastructure, must be intentional. When we copy performance optimizations, we inherit their risks too.