This week, the Oligo Security research team announced the discovery of critical vulnerabilities (including CVE-2023-43654) that lead to full-chain Remote Code Execution (RCE). The team found thousands of vulnerable instances publicly exposed, including some belonging to the world’s largest organizations, open to unauthorized access, insertion of malicious AI models, and potentially a full server takeover. Mitigation steps and a free tool to minimize exposure are suggested below.

UPDATE:

This week, the Oligo Security research team announced the discovery of new critical vulnerabilities that lead to remote code execution. Oligo found thousands of vulnerable instances publicly exposed, including some belonging to the world’s largest organizations, open to unauthorized access, insertion of malicious AI models, and potentially a full server takeover. This might affect millions of services and their end-users.

Today, AI models, from large language models (LLMs) to computer vision, are booming, requiring us to trust AI-based applications with our most sensitive data in order to get the best answers and information, even in life-and-death scenarios like preventing vehicle accidents, detecting cancer, and keeping public spaces safe. AI has even entered the realm of global conflict, used by armies and in weapons worldwide.

But with great power comes great responsibility.

“Responsible AI” has become a hot topic, with governments around the world, including the White House, calling for AI safety and compliance frameworks.

Complicating the risk picture: today’s AI rests on a foundation of open source software (OSS), which comes with its own security issues. In 2021, we saw the White House issue an executive order regarding the creation and maintenance of SBOMs. Later that year, organizations got a tough lesson in the importance of understanding OSS library use, as the Log4j vulnerabilities impacted a staggering number of organizations and quickly became the security flaw most widely exploited by attackers.

The hottest name in AI frameworks today is PyTorch, which has completely dominated ML research and is gaining ground in private companies’ AI efforts. That’s why it shocked our researchers to discover that – with no authentication whatsoever – we could remotely execute code with high privileges, using new critical vulnerabilities in PyTorch open-source model servers (TorchServe).

These vulnerabilities make it possible to compromise servers worldwide. As a result, some of the world’s largest companies might be at immediate risk.

The PyTorch library is at the confluence of AI models and OSS libraries. One of the world’s most-used machine learning frameworks, PyTorch presents an attractive target to attackers who want to breach AI-based systems. In late 2022, attackers leveraged dependency confusion to compromise a PyTorch package, infecting it with malicious code.

TorchServe is among the most popular model-serving frameworks for PyTorch. Maintained by Meta and Amazon (and an official CNCF project with the Linux Foundation), the open-source TorchServe library is trusted by organizations worldwide, with over 30,000 PyPI downloads per month and more than a million total Docker Hub pulls. It is dominant in the research world (over 90% of ML research papers now use PyTorch), and its commercial users include some of the world’s biggest companies, including Walmart, Amazon, OpenAI, Tesla, Azure, Google Cloud, Intel, and many more. TorchServe is also the base for projects such as Kubeflow, MLflow, KServe, AWS Neuron, and more. It is also offered as a managed service by the largest cloud providers, including SageMaker (AWS) and Vertex AI (GCP).

Using a simple IP scanner we were able to find tens of thousands of IP addresses that are currently completely exposed to the attack – including many belonging to Fortune 500 organizations.

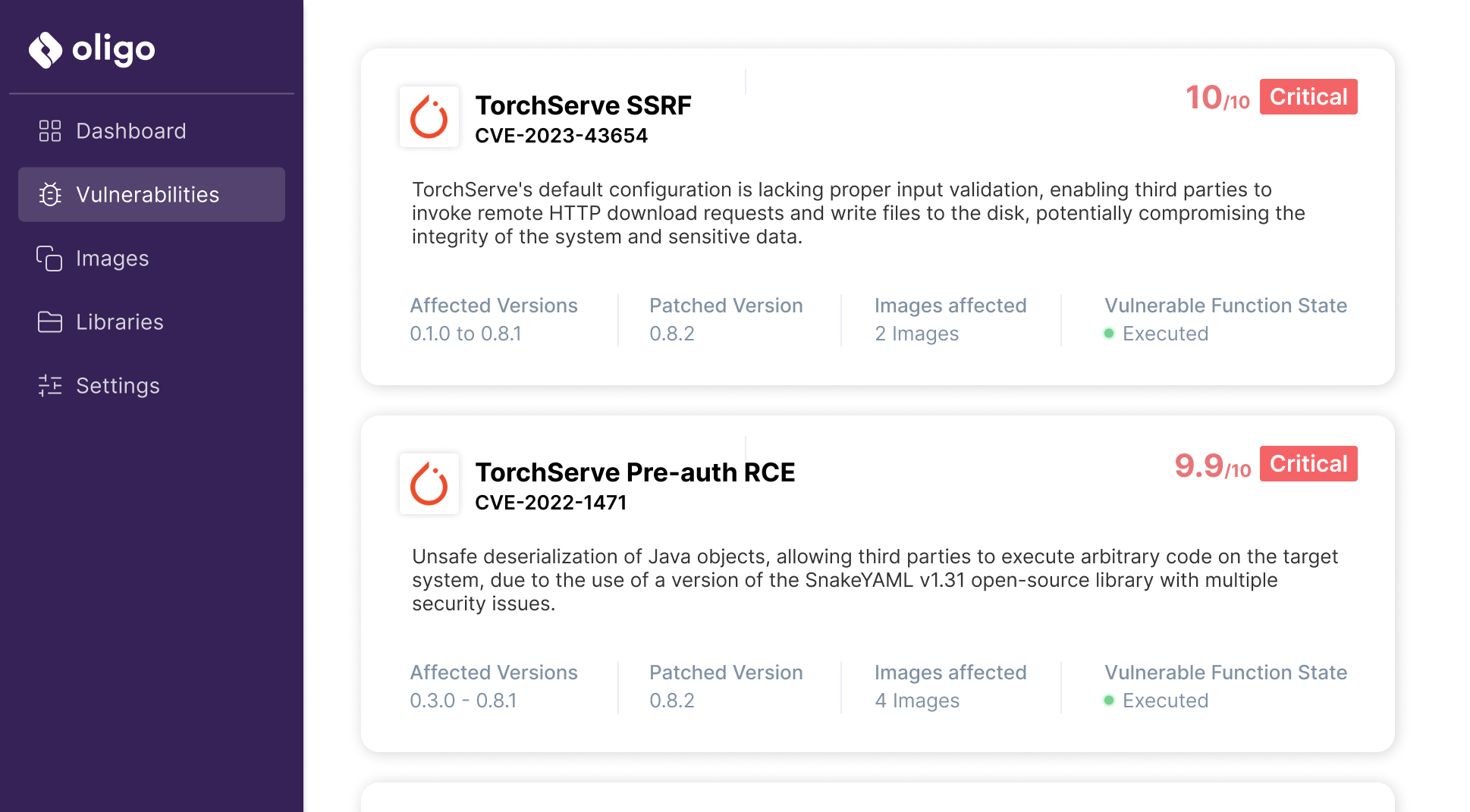

The vulnerabilities Oligo discovered impact all versions of TorchServe prior to 0.8.2. When used by a malicious actor, this chain of vulnerabilities results in remote code execution, allowing a complete takeover of the victim’s servers and networks and exfiltration of sensitive data.
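As a rough illustration of the version boundary described above, here is a minimal sketch that checks whether a TorchServe version string falls before 0.8.2. The function names are our own for this example, and the parser is deliberately simple: it compares only the first three numeric components and ignores pre-release or local suffixes.

```python
import re

# First release that is not affected, per the paragraph above.
FIXED_VERSION = (0, 8, 2)


def parse_version(version: str) -> tuple[int, ...]:
    """Extract up to three numeric components from a version string."""
    # Drop any local suffix such as "+cpu" before collecting the numbers.
    numbers = re.findall(r"\d+", version.split("+")[0])
    return tuple(int(n) for n in numbers[:3])


def is_affected(version: str) -> bool:
    """Return True if the version sorts before the fixed release."""
    parsed = parse_version(version)
    # Pad short versions such as "0.8" to three components.
    padded = parsed + (0,) * (3 - len(parsed))
    return padded < FIXED_VERSION


if __name__ == "__main__":
    for candidate in ("0.7.1", "0.8.1", "0.8.2", "0.9.0"):
        print(candidate, "affected" if is_affected(candidate) else "not affected")
```

In a Python environment where TorchServe was installed from PyPI, the installed version string can usually be obtained with `importlib.metadata.version("torchserve")` and passed to `is_affected`. A version check alone is not sufficient, since the bindings and authentication issues discussed below depend on configuration.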

By exploiting ShellTorch CVE-2023-43654, an attacker can execute code and take over the target server. The chain abuses an API misconfiguration that allows remote access to the management console without any authentication, then exploits a remote Server-Side Request Forgery (SSRF) vulnerability that allows uploading a malicious model, which leads to code execution. Our research team also found an unsafe deserialization vulnerability that can be triggered remotely, exposing another vector for executing arbitrary code. With code execution, the attacker gains a way to infiltrate the network and can use the resulting high privileges for lateral movement.

Using high privileges granted by these vulnerabilities, it is possible to view, modify, steal and delete AI models and sensitive data flowing into and from the target TorchServe server.

Let’s take a look at how these vectors combined to create ShellTorch: a perfect storm that might completely compromise the AI infrastructure of some of the world’s biggest businesses.

TorchServe exposes multiple interfaces, including a management API that allows managing models at runtime, in which we found the misconfiguration vulnerability.

The holy grail for attackers is the ability to infiltrate organizations from external networks. This means that by default, applications should be very cautious with the connections they expose outside the organization.

TorchServe’s documentation claims that the management API can be accessed only from localhost by default.

In fact, the interface is bound to <span class="grey-span">0.0.0.0</span> by default, making it accessible to external requests, and opening the door to any malicious actor to attack the application.

<div class="mt-code-snippet">

<div><span class="orange-span">inference_address</span>=http://0.0.0.0:8080</div>

<div><span class="orange-span">management_address</span>=http://0.0.0.0:8081</div>

<div><span class="orange-span">metrics_address</span>=http://0.0.0.0:8082</div>

</div>

Changing the configuration from the default can fix the misconfiguration, but this configuration mistake appears as the default in the TorchServe installation guide and in the default TorchServe Docker image.

As a result, the vulnerability is also present by default in Amazon’s and Google’s proprietary Docker images, and in self-managed services of the largest providers of machine learning services, including self-managed Amazon AWS SageMaker, self-managed Google Vertex AI, and even KServe (the standard Model Inference Platform on Kubernetes), as well as many other projects built on TorchServe.
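To make the misconfiguration concrete, here is a minimal sketch that scans the text of a properties-style configuration file for address settings bound to all interfaces. The function name is our own, and the parsing is an assumption based on the three `key=value` lines shown above. The sketch only looks at settings that are written explicitly in the file.

```python
from ipaddress import ip_address
from urllib.parse import urlsplit

# The three address settings shown in the snippet above.
ADDRESS_KEYS = ("inference_address", "management_address", "metrics_address")


def find_exposed_bindings(properties_text: str) -> dict[str, str]:
    """Return the address settings that listen on all interfaces."""
    exposed = {}
    for raw_line in properties_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and anything that is not key=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if key not in ADDRESS_KEYS:
            continue
        host = urlsplit(value).hostname or ""
        try:
            # 0.0.0.0 (IPv4) and :: (IPv6) are the "unspecified" addresses.
            if ip_address(host).is_unspecified:
                exposed[key] = value
        except ValueError:
            pass  # hostnames such as "localhost" are not wildcard addresses
    return exposed


if __name__ == "__main__":
    sample = (
        "inference_address=http://0.0.0.0:8080\n"
        "management_address=http://127.0.0.1:8081\n"
    )
    print(find_exposed_bindings(sample))
```

Any key this function reports is reachable from other hosts unless a firewall or network policy blocks it, so the management address in particular is worth rebinding to a loopback address.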

No Authentication - letting everyone in

This door for attackers is not just open: it’s left unattended. The management interface lacks authentication, granting unrestricted access to any user.

These misconfigurations allow anyone to send a request that uploads a malicious model from an attacker-controlled address.
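For defenders who want to verify this on their own servers, here is a minimal sketch of a reachability check. It assumes the management API answers `GET /models` (the model-listing endpoint) and treats an HTTP 200 response received without credentials as a sign that the interface is open. The function name and timeout are our own choices, and the check should only be run against systems you are authorized to test.

```python
import urllib.error
import urllib.request


def management_api_is_open(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if GET <base_url>/models answers 200 without credentials."""
    url = base_url.rstrip("/") + "/models"
    # Connect directly, ignoring any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Refused connections, timeouts, and 401/403/404 responses all land here.
        return False


if __name__ == "__main__":
    # 8081 is the management port from the configuration shown earlier.
    print(management_api_is_open("http://127.0.0.1:8081"))
```

Running the same check from a machine outside the server's network shows whether the interface is exposed externally rather than just locally.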

TorchServe is used to serve models in production. The models’ configuration files can be fetched from a remote URL using the workflow/model registration API. While the API contains logic for an allowed list of domains, the Oligo research team found that by default, all domains are accepted as valid URLs, resulting in an SSRF.

This means that an attacker can upload a malicious model that will be executed by the server, which results in arbitrary code execution.
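The difference between an accept-everything default and a restricted allow list can be sketched in a few lines. This is not TorchServe's implementation: the function, both pattern lists, and the example hostnames are hypothetical, chosen only to show why a permissive pattern defeats the purpose of the check.

```python
import re


def is_url_allowed(url: str, allowed_patterns: list[str]) -> bool:
    """Return True if the whole URL matches at least one allowed pattern."""
    return any(re.fullmatch(pattern, url) for pattern in allowed_patterns)


# Hypothetical stand-in for a default that accepts every domain.
PERMISSIVE = [r"https?://.*"]

# Hypothetical allow list limited to one internal model store.
RESTRICTED = [r"https://models\.example\.com/.*"]


if __name__ == "__main__":
    attacker_url = "http://attacker.example/evil.mar"
    print(is_url_allowed(attacker_url, PERMISSIVE))
    print(is_url_allowed(attacker_url, RESTRICTED))
```

To our understanding, TorchServe's configuration exposes an `allowed_urls` setting for this purpose, so narrowing it to the model stores you actually use is a natural hardening step. Note that the dots in the restricted pattern are escaped and the path separator is required, which keeps look-alike hosts from matching.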

Combined with the previous vulnerability, in which we showed that any attacker can access the management server remotely, this enables remote code execution without any authentication on any default TorchServe server.

This can be used as an attack vector by itself, and can also be used to trigger another unsafe deserialization RCE vulnerability below [vulnerability #3].

As part of a bigger research project on insecure-by-design libraries that will soon be published, the Oligo research team identified that TorchServe is vulnerable to a critical RCE via the SnakeYAML deserialization vulnerability, caused by a misuse of the library.

AI models can include a YAML file to declare their desired configuration, so by uploading a model with a maliciously crafted YAML file, we were able to trigger an unsafe deserialization attack that resulted in code execution on the machine.
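Unsafe YAML deserialization attacks of this kind typically rely on explicit type tags (written with a `!!` prefix) that instruct the parser to construct arbitrary classes. As a rough triage aid, here is a heuristic sketch that flags such tags in a model's YAML text. The function name and the list of tags treated as safe are our own assumptions, and the scan is text-based, so it can produce false positives. It is not a substitute for patching or for loading YAML with a safe constructor.

```python
import re

# Standard YAML type tags that do not instantiate arbitrary classes.
SAFE_TAGS = {
    "str", "int", "float", "bool", "null", "map", "seq",
    "binary", "timestamp", "set", "omap", "pairs",
}

# Matches explicit tags such as !!int or !!some.package.ClassName.
TAG_PATTERN = re.compile(r"!!([\w.$]+)")


def find_suspicious_tags(yaml_text: str) -> list[str]:
    """Return explicit type tags that are not in the safe list."""
    found = []
    for line in yaml_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # whole-line comment
        code = line.split(" #", 1)[0]  # rough removal of trailing comments
        for tag in TAG_PATTERN.findall(code):
            if tag not in SAFE_TAGS:
                found.append(tag)
    return found


if __name__ == "__main__":
    sample = "batchSize: !!int 4\nhandler: !!javax.script.ScriptEngineManager []\n"
    print(find_suspicious_tags(sample))
```

A non-empty result means the file asks the parser to build objects by class name, which deserves a manual review before the model is registered.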

This combination of vulnerabilities allows us to remotely run code with high privileges without any authentication.

With this RCE exposed to external networks, we were able to compromise TorchServe servers around the world. We were able to find tens of thousands of IP addresses that are currently completely exposed to the attack.

Once an attacker can breach an organization’s network by executing code on its PyTorch server, they can use it as an initial foothold to move laterally to infrastructure in order to launch even more impactful attacks, especially in cases where proper restrictions or standard controls are not present.

But lateral movement may not even be necessary: using ShellTorch, the attackers are already in the core of the AI infrastructure, allowing them to gain and leverage TorchServe’s high privileges in order to view, modify, steal, and delete AI models, which often contain a business’s core IP. What makes these vulnerabilities even more dangerous is that an attacker who exploits the model server can access and alter sensitive data flowing into and out of the target TorchServe server, harming the trust and credibility of the application.

2023 has been dubbed “The Year of AI.” The pace of innovation in AI is fast, and the competition is fierce.

Open source tools and libraries are now fundamental to the dynamic growth of AI production frameworks and the ML ops landscape. While open-source components have driven innovation and accelerated development, their integration into AI production environments creates the potential for massive security risks.

The combination of an AI industry in hypergrowth and easy-to-use open-source tools creates a precarious balance between innovation and vulnerabilities, exposing systems to potentially critical threats (like those we have seen in this blog).

The vulnerabilities Oligo found can be considered a real-world example of multiple security risks cited in the recent OWASP Top 10 for LLM Applications.

As we can see from the ShellTorch vulnerabilities, organizations can put themselves at significant risk, potentially exposing their systems to critical threats, even when they trust only projects that are widely used, sustainable, and maintained by the top software companies in the world. Although TorchServe is maintained by Amazon and Meta, these vulnerabilities have been present in all versions prior to 0.8.2. Some managed services have added compensating controls that mitigate the exposure.

Using these tools as a service hosted by large, trusted companies is also no guarantee against vulnerabilities and unsafe configurations: the default TorchServe configurations for cloud providers such as GKE, AKS, and EKS, as well as open-source projects and frameworks, all used the default unsafe configuration that made this chained attack possible.

Even the default self-managed Deep Learning Containers (DLCs) by Amazon and Google have been found to be vulnerable to ShellTorch. Amazon has recently updated its DLC and issued a security advisory for its users regarding ShellTorch. The managed services of Amazon and Google include compensating controls that reduce the exposure.

Of course, the most important question to most security practitioners is: how do we protect our systems from ShellTorch if we are TorchServe users?

With tens of thousands of impacted instances, it’s critical to identify whether your organization may be using the affected versions of TorchServe, and to have specific steps for mitigating your risk.

To find out fast, use our free tool.

We’re also hosting a threat briefing where we’ll go over the potential impacts of ShellTorch, as well as essential mitigation steps.

As adversaries set their sights on AI and LLMs, organizations need, more than ever, a way to keep production environments safe, even when using untrusted, third-party code.

But how?

Oligo has built a security solution capable of identifying real, exploitable threats in a runtime context. By analyzing the context in which libraries are being used, Oligo can uncover sources of undesired or insecure application behavior that other tools fail to detect.

Unlike static analysis solutions, Oligo detects anomalies at runtime in any code — whether that code came from open-source libraries, proprietary third-party software, or was custom-written — and can also detect sources of risk including insecure configuration settings.

When vulnerabilities and security flaws are detected, Oligo also allows low-disruption fixes that don’t require full patching or version updates to implement.

We would like to thank the security teams of AWS & Meta for their dedication and for working closely with our research team to ensure all users are safe.

We would also like to thank the PyTorch maintainers from Meta & Amazon for building this amazing OSS project, for their fast response, and for balancing security with usability for all users.

Oligo helps organizations focus on true exploitability, streamlining security processes without hindering developer productivity.